Completed as part of my CSE 540: Machine Learning and Society course.

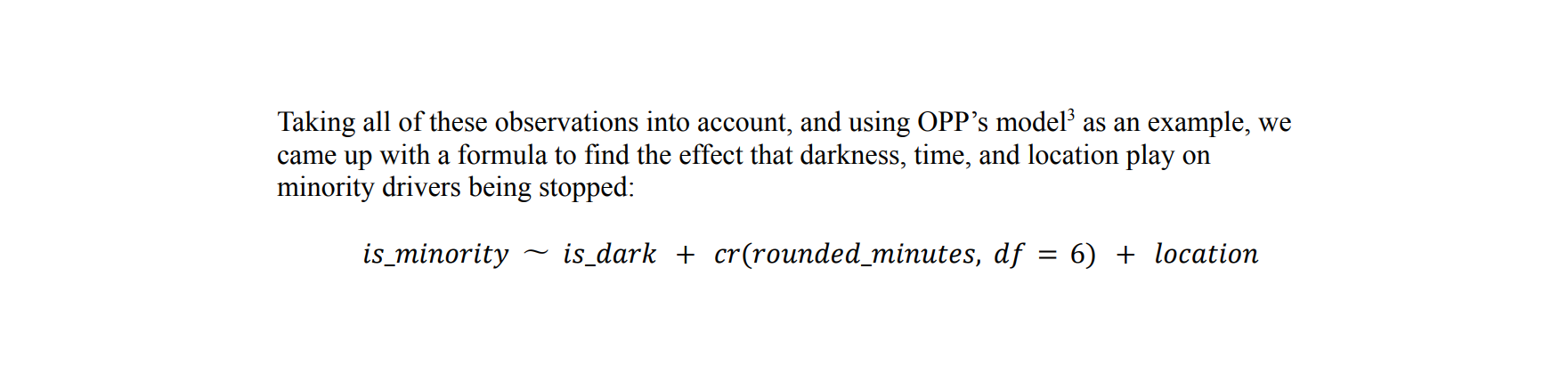

To understand how traffic stops can be racially biased and to identify that bias in the data, we settled on the veil of darkness test after much deliberation. We originally planned to study hot spot policing, but we ran into issues analyzing the data; the veil of darkness test was much more approachable.

Devised by Grogger and Ridgeway in 2006, the veil of darkness test rests on the premise that police have a harder time discerning a driver's race after dark. If stop decisions are racially biased, Black drivers should therefore make up a smaller share of the drivers stopped after dark than of those stopped in daylight. To keep differences in who is on the road at different hours from confounding the comparison, the test compares stops made at the same clock time, which falls in daylight during part of the year and in darkness during the rest as the time of sunset shifts. Applying the veil of darkness test to data from the Stanford Open Policing Project, we concluded that there is racial bias in the traffic stops we analyzed.
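
The comparison described above can be sketched as a simple stratified calculation. This is a minimal illustration, not the project's actual model: it assumes each stop already carries its local date and time, the local dusk time for that date, and a race label (the `Stop` class, its field names, and the `"black"` label value are hypothetical). Stops are grouped into clock-time bins so that daylight and after-dark stops are only compared at the same time of day, and the per-bin differences are averaged. Published analyses typically fit a regression model instead, and the project's scikit-learn model may differ.

```python
import math
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Stop:
    # Hypothetical record layout; field names are assumptions for illustration.
    when: datetime      # local date and time of the stop
    dusk: datetime      # local dusk (end of civil twilight) on that date
    driver_race: str    # race label, e.g. "black" or "white"


def veil_of_darkness_gap(stops, bin_minutes=15):
    """Average (dark share - daylight share) of Black drivers across clock-time bins.

    Only bins containing both daylight and after-dark stops contribute, so
    each comparison holds the time of day fixed. A negative value means Black
    drivers made up a smaller share of stops after dark, the pattern the test
    reads as evidence of bias. Stops between sunset and dusk are counted as
    daylight here; dropping that ambiguous period is a common refinement.
    """
    # bins[time_bin][is_dark] = [black_count, total_count]
    bins = defaultdict(lambda: {False: [0, 0], True: [0, 0]})
    for s in stops:
        minute_of_day = s.when.hour * 60 + s.when.minute
        cell = bins[minute_of_day // bin_minutes][s.when >= s.dusk]
        cell[1] += 1
        if s.driver_race == "black":
            cell[0] += 1

    gaps = []
    for cells in bins.values():
        (b_light, n_light), (b_dark, n_dark) = cells[False], cells[True]
        if n_light and n_dark:
            gaps.append(b_dark / n_dark - b_light / n_light)
    # Unweighted mean over usable bins; NaN if no bin has both kinds of stops.
    return sum(gaps) / len(gaps) if gaps else math.nan
```

Binning by clock time is the simplest way to mirror the test's same-time-of-day comparison; a regression with time-of-day controls would use the data more efficiently.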

The technologies used include Python, scikit-learn, and the sunrise-sunset API.
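
Deciding whether a stop happened after dark requires the dusk time for each date and location, which is what a service like the sunrise-sunset API provides. The sketch below assumes the public endpoint at `https://api.sunrise-sunset.org/json` with its `lat`, `lng`, `date`, and `formatted=0` parameters, and uses the `civil_twilight_end` field as dusk; which field the project actually used is not stated, and the helper names are hypothetical. With `formatted=0` the API returns ISO 8601 timestamps in UTC, so they must be converted to local time before comparing them with local stop times.

```python
import json
from datetime import date, datetime
from urllib.parse import urlencode
from urllib.request import urlopen

API_URL = "https://api.sunrise-sunset.org/json"


def build_request_url(lat: float, lng: float, day: date) -> str:
    # formatted=0 requests ISO 8601 timestamps instead of "7:27:02 PM"-style strings.
    query = urlencode({"lat": lat, "lng": lng, "date": day.isoformat(), "formatted": 0})
    return f"{API_URL}?{query}"


def parse_dusk(payload: str) -> datetime:
    """Return the end of civil twilight (assumed dusk) as a timezone-aware datetime."""
    data = json.loads(payload)
    if data.get("status") != "OK":
        raise ValueError(f"sunrise-sunset API returned status {data.get('status')!r}")
    return datetime.fromisoformat(data["results"]["civil_twilight_end"])


def fetch_dusk(lat: float, lng: float, day: date) -> datetime:
    # Network call kept separate so parsing can be checked offline.
    with urlopen(build_request_url(lat, lng, day), timeout=10) as resp:
        return parse_dusk(resp.read().decode("utf-8"))
```

Since dusk depends only on location and date, caching one lookup per (location, date) pair avoids querying the API once per stop.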